What Is The Difference Between AI and Robotics? Written by Bernard Marr

What is artificial intelligence (AI)?

Artificial intelligence is a branch of computer science that creates machines capable of problem-solving and learning in ways similar to humans. Using some of the most innovative AI techniques, such as machine learning and reinforcement learning, algorithms can learn and modify their actions based on input from their environment without human intervention.

Artificial intelligence technology is deployed at some level in almost every industry, from finance to manufacturing and from healthcare to consumer goods. Google’s search algorithm and Facebook’s recommendation engine are examples of artificial intelligence that many of us use every day. For more practical examples and more in-depth explanations, check out my website section dedicated to AI.

[Image: humanoid robot creating art on a canvas with many colors (generated with DALL·E, 2022-12-14)]

What is robotics?

Robotics is the branch of engineering and technology focused on constructing and operating robots. Robots are programmable machines that can carry out a task autonomously or semi-autonomously. They use sensors to interact with the physical world and are capable of movement, but they must be programmed to perform a task. Again, for more on robotics, check out my website section on robotics.

Where do robotics and AI mingle?

One reason the line is blurry, and people are confused about the differences between robotics and artificial intelligence, is that there are artificially intelligent robots: robots controlled by artificial intelligence. In combination, AI is the brain and robotics is the body. Let’s use an example to illustrate. A simple robot can be programmed to pick up an object, place it in another location, and repeat this task until it’s told to stop. With the addition of a camera and an AI algorithm, the robot can “see” an object, detect what it is and determine from that where it should be placed. This is an example of an artificially intelligent robot.
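To make the contrast concrete, here is a minimal Python sketch, assuming a toy setup: the "simple" robot replays one fixed pick-and-place motion, while the "AI" robot runs a perception step on each camera frame and looks up where that kind of object belongs. The frame features (`aspect_ratio`), the `toy_classifier` rule and the bin names are all hypothetical stand-ins; a real robot would run a trained image classifier at that step.

```python
from typing import Callable, Dict, List

def simple_robot(cycles: int, source: str = "A", target: str = "B") -> List[str]:
    """A pre-programmed robot: the same fixed motion, repeated `cycles` times."""
    return [f"pick from {source} -> place at {target}" for _ in range(cycles)]

def ai_robot(
    camera_frames: List[dict],
    classify: Callable[[dict], str],
    destination: Dict[str, str],
) -> List[str]:
    """An 'AI' robot: perceive each object first, then decide where it goes."""
    log = []
    for frame in camera_frames:
        label = classify(frame)                       # "see" and detect the object
        bin_name = destination.get(label, "reject")   # unknown objects are set aside
        log.append(f"pick {label} -> place at {bin_name}")
    return log

# Hypothetical stand-in for a trained vision model: a hand-written rule
# on a made-up feature. A real system would run an image classifier here.
def toy_classifier(frame: dict) -> str:
    return "bolt" if frame["aspect_ratio"] > 2.0 else "nut"

frames = [{"aspect_ratio": 3.1}, {"aspect_ratio": 1.0}, {"aspect_ratio": 2.5}]
print(simple_robot(2))
print(ai_robot(frames, toy_classifier, {"bolt": "bin 1", "nut": "bin 2"}))
```

The arm motion itself is identical in both cases; only the decision about *where* to place the object moves from a fixed program to a perception step.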

Artificially intelligent robots are a fairly recent development. As research and development continue, we can expect artificially intelligent robots to start to reflect those humanoid characterizations we see in movies.

Self-aware robots

One of the barriers to robots being able to mimic humans is that robots don’t have proprioception: an awareness of their own muscles and body parts, a sort of “sixth sense” for humans that is vital to how we coordinate movement. Roboticists have been able to give robots sight through cameras, smell and taste through chemical sensors, and hearing through microphones, but they have struggled to help robots acquire this “sixth sense” for perceiving their own bodies. Now, using sensory materials and machine-learning algorithms, progress is being made. In one case, randomly placed sensors detect touch and pressure and send data to a machine-learning algorithm that interprets the signals.
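A minimal sketch of the "randomly placed sensors plus a learning algorithm" idea, under loudly stated assumptions: a one-dimensional strip of "skin", 12 sensors at random positions, a made-up response model in which each sensor reads more strongly the closer the press, and a k-nearest-neighbour regressor standing in for whatever learning method real systems use. The learner never sees the sensor positions; it only learns from examples of (readings, touch position).

```python
import math
import random

random.seed(0)

# Hypothetical setup: 12 pressure sensors scattered at random along a 1-D strip [0, 1].
SENSORS = [random.random() for _ in range(12)]
WIDTH = 0.25  # assumed spread of a press through the material

def read_sensors(touch_x):
    """Simulated readings: each sensor responds more strongly the closer the touch."""
    return [math.exp(-((touch_x - s) / WIDTH) ** 2) for s in SENSORS]

# Training data: touches at known positions and the readings they produced.
train = [(read_sensors(x), x) for x in (i / 499 for i in range(500))]

def locate_touch(readings, k=3):
    """k-nearest-neighbour estimate of where the strip was touched."""
    def dist(a, b):
        return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))
    nearest = sorted(train, key=lambda pair: dist(pair[0], readings))[:k]
    return sum(x for _, x in nearest) / k

print(round(locate_touch(read_sensors(0.37)), 2))
```

Because the sensor layout is learned implicitly from examples, nobody has to calibrate where each sensor ended up, which is the practical appeal of pairing random placement with machine learning.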

In another example, roboticists are trying to develop a robotic arm that is as dexterous as a human arm and can grab a variety of objects. Until recently, this meant either training a robot individually for every task or giving a machine-learning algorithm an enormous dataset of experience to learn from.

Robert Kwiatkowski and Hod Lipson of Columbia University are working on “task-agnostic self-modelling machines.” Similar to an infant in its first year of life, the robot begins with no knowledge of its own body or the physics of motion. As it repeats thousands of movements, it takes note of the results and builds a model of them. The machine-learning algorithm is then used to help the robot strategize about future movements based on its prior motion. By doing so, the robot is learning how to interpret its actions.
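The loop described above (move randomly, record the results, then use that record to plan) can be sketched in a few lines. This is not the Columbia team's method; it is a deliberately simplified illustration assuming a hypothetical two-joint planar arm, with a nearest-neighbour memory of past movements standing in for a learned self-model. The `real_arm` function plays the role of physics that the robot never inspects directly.

```python
import math
import random

random.seed(1)

L1, L2 = 1.0, 0.8  # link lengths of a hypothetical 2-joint planar arm

def real_arm(theta1, theta2):
    """The physics the robot does not know: where the fingertip ends up."""
    x = L1 * math.cos(theta1) + L2 * math.cos(theta1 + theta2)
    y = L1 * math.sin(theta1) + L2 * math.sin(theta1 + theta2)
    return x, y

# 1) Motor babbling: try random joint commands and record what happened.
memory = []
for _ in range(2000):
    cmd = (random.uniform(-math.pi, math.pi), random.uniform(-math.pi, math.pi))
    memory.append((cmd, real_arm(*cmd)))

# 2) Planning from the self-model: reuse the past command whose
#    recorded outcome landed closest to the goal.
def plan(target):
    return min(memory, key=lambda m: math.dist(m[1], target))[0]

target = (1.2, 0.6)
cmd = plan(target)
print("miss distance:", round(math.dist(real_arm(*cmd), target), 3))
```

A real self-modelling robot would fit a model that generalises between experiences (the Columbia work uses neural networks) rather than only replaying stored ones, but the structure of explore, record, then plan is the same.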

A team of researchers at the USC Viterbi School of Engineering believes it is the first to develop an AI-controlled robotic limb that can recover from falling without being explicitly programmed to do so. This is revolutionary work that shows robots learning by doing.

Artificial intelligence enables modern robotics. Machine learning and AI help robots to see, walk, speak, smell and move in increasingly human-like ways.

1) Almost human:

The world’s first robot artist in her own words:

Named after Ada Lovelace, the pioneering 19th-century mathematician, and featuring a hyper-realistic humanoid head and facial features, it's easy to see why robot artist Ai-Da is provoking such a visceral human reaction from critics and fans alike.

[Image: Ai-Da and creator Aidan Meller (left) stand with one of her artworks]

Ai-Da, created by gallery director Aidan Meller, is the world's first realistic humanoid robot artist, capable of drawing people from life using her eye and a pencil. Her work has already garnered attention from art collectors, with the entirety of her upcoming solo exhibition, 'Unsecured Futures', sold to collectors for a total of more than £1m.

[Image: Ai-Da with her paintings]

In person, Ai-Da is remarkably lifelike until you take in her robotic torso and arms. Her face has character, both in its structure and in its impressive range of expression (including meeting your eyes when speaking), and her Oxford-accented voice is sweetly pitched, feminine and unthreatening. Her vocal range is equally idiomatic, with rising and falling intonation that mimics British speech patterns. In fact, the only robotic quality of her voice is the slight delay between clauses, as if she were pausing between lines of a poem. With such wonderful, uncanny technologies at her disposal, it is very, very easy to think of this machine as a living thing.

“I am very pleased you have come to see my artwork,” Ai-Da tells our team when we meet in Oxford to shoot our cover image. “My purpose is to encourage discussion on art, creativity and the ethical choices on new technologies and our future.”

Our photographer, who has worked with models and celebrities alike, asks whether Ai-Da enjoys being photographed. “I like that photographs of me inspire discussion in audiences,” she says.

Ai-Da is a natural in front of the camera. One of her most interesting works in the Unsecured Futures collection is a video homage to Yoko Ono’s 1964 performance Cut Piece, in which members of the audience take turns cutting small pieces from the artist’s clothing. Ai-Da’s tribute, entitled Privacy, involves placing clothing on the robot, eventually hiding her ‘other-ness’ and raising questions about the nature of privacy.

“My favourite artists are Yoko Ono and Max Ernst,” Ai-Da tells Tempus. “My favourite artwork is Picasso’s Guernica. It was a cautionary painting of the 20th century and some of those warnings are still relevant today.”

Creator Meller explains why Japanese artist Yoko Ono matters to the project: “Yoko Ono’s artworks and activism throughout the 1960s make her an incredibly significant artist. We took inspiration from Ono and wanted to engage with the world we’re in, in a similar way, even though we’re making a very different point about privacy.”

With such existential issues at the forefront of Meller’s work, it’s perhaps unsurprising that audiences have reacted so strongly to Ai-Da and her work.

“The fact is, she’s a robot with very human features, and people have been nervous about what she’s thinking, whether she’s safe, whether this is a sign that robots will take our jobs. There is a lot of insecurity around what Ai-Da and her work represents,” he says. “Yet, people have also brought Ai-Da into discussion about human identity – why is she female? How could humans and technology be combined? Is transhumanism, or super-humanism, something we should be talking about?

[Image: A mix of advanced robotics and groundbreaking algorithms, Ai-Da is a piece of art in herself]

“Then we have questions about the environment and privacy. Where does technology come into play in these areas – and where should it? We’re grappling with so much; it’s a juggernaut,” he says. “Obviously, Ai-Da is an avatar. There’s a persona. She’s real but she’s a fiction, as well. So, who is she? People really resonate with that. This really is only the beginning of our plans.”

This combination of art and AI continues to raise questions of ethics, privacy and identity, all while showcasing the extreme advancements of the UK’s robotics industry and programming capabilities; its significance cannot be overstated. But it also highlights the debate over whether these incredible advancements will be a boon or a burden in the years to come. We might be living in the future but, as Ai-Da continues to ask, can that future ever be secure?

[Image: A.I.-controlled robot as painter]

Which AI Creates the Best (and Most Terrifying) Art? by Eric Griffith

The tech world never lacks trending topics, but one of the most interesting this year is the use of artificial intelligence (AI) to create art. The resulting images can be everything from grotesque to stunning.

Here's an ultra-simplified explanation of how they work:

Train a model on millions, if not billions, of captioned images; it can then generate something new and unique based on a text description you provide, called a "prompt." (This article provides a more detailed breakdown of the process.)

Recently, AI tools have created memes (read about Loab, the “AI art cryptid,” for some genuine chills). They've generated the imagery of an entire sci-fi short film and a video game and have even won art contests.

Some call AI art a whole new artistic medium. Arguably the most popular tool is Dall-E, which is now used to create as many as 2 million images per day. Few safeguards exist against using these AIs for nefarious purposes (think propaganda and disinformation). But that’s not going to stop people, especially with the truly open-source options.

Several big-name tools that were in private beta have become available to everyone, including the aforementioned Dall-E as well as Midjourney and DreamStudio. New mobile apps such as Wonder, Dream by Wombo, and Starryai are also available. Even big tech companies, namely Meta and Google, are in on it. Both have announced tools that will go beyond still imagery and make AI-generated videos, named Make-A-Video and Imagen Video, respectively.

As the big companies hesitate, smaller, privately owned AI art generators get a chance to show off their wares well ahead of the competition. Some have heavy parental-type restrictions and content policies in place to prevent issues; some of these can be circumvented. At least one that we tested (Wonder) popped up some unexpected, full-frontal female imagery: tastefully rendered, but still NSFW. AI art is also a hot-button issue for artists, some of whom are getting copied by the AIs. They may be losing their livelihoods.

Some of the imagery from these AI tools is breathtaking. There’s a reason that a person won a state fair art contest using Midjourney. It's still a lot of work: he spent 80 hours honing his art prompt, plus he still had to use extra software tools like Adobe Photoshop and Gigapixel AI to enhance the original AI image.

After seeing some cool images generated from next to nothing, we tested the top five AI art generators that offer free access to see what they could generate using the same prompts: Dall-E, Midjourney, DreamStudio Lite, Craiyon, and Wonder. The result is a direct, if subjective, comparison. Read on to see the stunning effects.

What is a GAN?

Many art pieces generated by AI-based algorithms involve GANs (generative adversarial networks), although several of the newest text-to-image tools, including Dall-E 2 and the Stable Diffusion model behind DreamStudio, are built mainly on diffusion models instead. With a GAN, two sub-models are trained at the same time. The first is a generator model that is trained to generate new examples, and the second is a discriminator model that attempts to classify examples as either real or fake. The two models are trained simultaneously until the discriminator model is tricked about half of the time, meaning it does no better than a coin flip. Once this occurs, the generator model is generating plausible examples.
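The "tricked about half of the time" condition has a precise meaning in the standard GAN formulation introduced by Goodfellow et al. in 2014. In the notation below, G is the generator, D is the discriminator (outputting the probability that its input is real), p_data is the distribution of real images, p_z is the random noise fed to the generator, and p_g is the distribution of the generator's outputs. The two models play a minimax game over the value function V; for any fixed generator, the best possible discriminator is the ratio on the second line, which equals exactly one half everywhere once the generator's outputs are distributed like the real data.

```latex
\min_{G}\max_{D}\; V(D,G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]

D^{*}_{G}(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_{g}(x)}
\qquad\Longrightarrow\qquad
D^{*}_{G}(x) = \tfrac{1}{2} \quad \text{when } p_{g} = p_{\text{data}}
```

In practice, training rarely reaches this equilibrium exactly; the 50% figure is the idealised target rather than a stopping rule that real systems hit precisely.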

[Image: A.I.-controlled movie-maker robot]

2) A.I. is here, and it’s making movies. Is Hollywood ready? BY BRIAN CONTRERAS


Writer-director Scott Mann had spent production on “Fall,” his vertigo-inducing thriller about rock climbers stuck atop a remote TV tower, encouraging the two leads to have fun with their dialogue. That improv landed a whopping 35 “f-cks” in the film, placing it firmly in R-rated territory.

But when Lionsgate signed on to distribute “Fall,” the studio wanted a PG-13 edit. Sanitizing the film would mean scrubbing all but one of the obscenities.

“How do you solve that?” Mann recalled from the glass-lined conference room of his Santa Monica office this October, two months after the film’s debut. A prop vulture he’d commandeered from set sat perched out in the lobby.

Reshoots, after all, are expensive and time-consuming. Mann had filmed “Fall” on a mountaintop, he explained, and struggled throughout with not just COVID but also hurricanes and lightning storms. A colony of fire ants had taken up residence inside the movie’s main set, a hundred-foot-long metal tube, at one point; when the crew woke them up, the swarm enveloped the set “like a cloud.” “‘Fall’ was probably the hardest film I ever made,” said Mann. Could he avoid a redux?

[Image: Fall movie]

The solution, he realized, just might be a project he’d been developing in tandem with the film: artificially intelligent software that could edit footage of the actors’ faces well after principal photography had wrapped, seamlessly altering their facial expressions and mouth movements to match newly recorded dialogue.

It’s a deceptively simple use for a technology that experts say is poised to transform nearly every dimension of Hollywood, from the labor dynamics and financial models to how audiences think about what’s real or fake.

Artificial intelligence will do to motion pictures what Photoshop did to still ones, said Robert Wahl, an associate computer science professor at Concordia University Wisconsin who’s written about the ethics of CGI, in an email. “We can no longer fully trust what we see.”

3) Making the Impossible Possible in Film Production with AI:

Artificial intelligence in filmmaking might sound futuristic, but we have already reached this point. Technology is already making a significant impact on film production. Today, many of the top-performing movies in the visual-effects category use machine learning and AI in their production. Major pictures like ‘The Irishman’ and ‘Avengers: Endgame’ are no different. It would be no surprise if the next movie you watch were written by AI, performed by robots, and animated and rendered by a deep learning algorithm. But why do we need artificial intelligence in filmmaking? In a fast-moving world, everything relies on technology. Integrating artificial intelligence and related technologies into film production can help studios make movies faster and earn more income. Technology can also ease almost every task in the film industry.

Let us look at some applications of AI in film production:

[Image: How AI is used in movies]

Writing scripts:

‘Artificial intelligence writes a story’ is what happens here. Humans can imagine and script amazing stories, but they can’t be sure those stories will perform well in theatres. AI is pitched as a way to help: machine learning algorithms are fed large amounts of movie data, analyse it, and generate scripts intended to appeal to audiences. In 2019, comedian and writer Keaton Patti published a Batman movie script that he said an AI bot had generated. In 2016, AI wrote the script for a 10-minute short film, Sunspring. The model was trained on scripts from the 1980s and 1990s.
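The core idea behind script-writing models (learn which words tend to follow which in existing scripts, then sample new text) can be shown with a much simpler technique. This sketch is not the model that wrote Sunspring; it swaps in a word-level Markov chain, one of the most basic text generators. The two-sentence "screenplay" corpus is invented for illustration.

```python
import random
from collections import defaultdict

def train_markov(corpus):
    """Map each word to the list of words that followed it in the corpus."""
    words = corpus.split()
    table = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        table[current].append(nxt)
    return dict(table)

def generate(table, start, length, seed=0):
    """Sample up to `length` words by repeatedly picking a recorded follower."""
    rng = random.Random(seed)
    word, out = start, [start]
    for _ in range(length - 1):
        followers = table.get(word)
        if not followers:  # dead end: no word ever followed this one
            break
        word = rng.choice(followers)
        out.append(word)
    return " ".join(out)

# Made-up "training script"; a real system would learn from thousands of screenplays.
corpus = ("INT. SPACESHIP - NIGHT. The captain looks at the stars. "
          "The captain looks at the door. The door opens and the stars go dark.")
table = train_markov(corpus)
print(generate(table, "The", 12))
```

A chain like this only remembers one word of context, which is why its output drifts quickly; neural models track far longer context, but they still produce text that can read like Sunspring's famously odd dialogue.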

Simplifying pre-production:

Pre-production is an important but stressful task. However, AI can help streamline the process. AI can plan schedules around the availability of actors and crew, and find apt locations that suit the storyline.

Vault’s RealDemand AI platform analyses thousands of key elements of the story, outline, script, casting, and trailer to help maximise ROI as early as 18 months before a film’s release, factoring in release date, country, audience age, and more.

[Image: Movies on Artificial Intelligence]

Character making:

Graphics and visual effects never fail to steal people’s hearts. Digital Domain applied machine learning technologies to design amazing fictional characters like Thanos in Avengers: Infinity War. Filmmakers today also use CGI to bring dead actors back to life on screen. For example, two beloved Star Wars characters, Princess Leia (Carrie Fisher) and Grand Moff Tarkin (Peter Cushing), were recreated in Rogue One; the makers used CGI to make the characters look exactly as they did in 1977’s Star Wars: A New Hope. Carrie Fisher died before Episode IX: The Rise of Skywalker was filmed, and previously unused footage of her, combined with visual effects, was used to complete her story. In the Fast and Furious franchise, the late Paul Walker was virtually recreated to finish his scenes in Furious 7.

Subtitle creation:

Global media publishing companies have to adapt their content so that viewers in different regions can consume it. To deliver video content with subtitles in multiple languages, production houses can use AI-based technologies like natural language generation and natural language processing.

For example, Star Wars has been translated into more than 50 languages to date. However, you still need humans in the loop to ensure the subtitles are accurate.
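Whatever model produces the transcript or translation, its output usually has to be packaged into a subtitle file before it reaches viewers. The sketch below writes SubRip (.srt) cues: a running index, a `HH:MM:SS,mmm --> HH:MM:SS,mmm` timing line, the text, and a blank line. The timed segments are hypothetical, standing in for the output of a speech-recognition and translation step; this is also the point where a human reviewer would check the text.

```python
def srt_timestamp(seconds):
    """Format a time in seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"

def to_srt(segments):
    """segments: iterable of (start_seconds, end_seconds, text) tuples."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        blocks.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n")
    return "\n".join(blocks)  # cues are separated by a blank line

# Hypothetical output of a speech-recognition + translation step (Spanish subtitles).
segments = [(1.0, 3.5, "Hola, ¿qué tal?"), (4.2, 6.75, "Tenemos que irnos.")]
print(to_srt(segments))
```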

Movie Promotion:

To help make a movie a box-office success, AI can be leveraged in the promotion process. AI algorithms can be used to evaluate the viewer base, the excitement surrounding the movie, and the popularity of the actors around the world.

[Image: Future movie-editing scene by an A.I.-controlled robot]

Movie editing:

In editing feature-length movies, AI supports film editors. With facial recognition technology, AI algorithms can recognize the key characters and sort scenes for human editors. With a first draft done quickly, editors can focus on the scenes that carry the main plot of the script.
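One common way to sort footage by character is to compare face embeddings: a face-recognition model turns each detected face into a vector, and faces of the same person produce vectors pointing in similar directions. The sketch assumes that step has already happened; the three-number "embeddings", the shot IDs and the 0.8 similarity threshold are all made up for illustration (real embeddings have hundreds of dimensions). Each shot goes to the closest reference character by cosine similarity, or to "unknown" if nothing is close enough.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def sort_shots_by_character(shot_faces, references, threshold=0.8):
    """Assign each shot to the reference character whose embedding it matches best."""
    bins = {name: [] for name in references}
    bins["unknown"] = []
    for shot_id, face in shot_faces.items():
        name, score = max(((n, cosine(face, ref)) for n, ref in references.items()),
                          key=lambda pair: pair[1])
        bins[name if score >= threshold else "unknown"].append(shot_id)
    return bins

# Hypothetical embeddings for two reference characters and three shots.
references = {"lead": [0.9, 0.1, 0.0], "villain": [0.0, 0.2, 0.95]}
shots = {"shot_001": [0.85, 0.15, 0.05],
         "shot_002": [0.05, 0.25, 0.9],
         "shot_003": [0.5, 0.5, 0.5]}
print(sort_shots_by_character(shots, references))
```

The "unknown" bin matters in practice: shots the system is unsure about go back to the human editor instead of being silently misfiled.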

If you see a movie over the holidays, an A.I. might have helped create it.

Will you be able to tell? Would it matter?

4) Machine Learning Healthcare Applications – 2018 and Beyond (in the field of medicine), by Daniel Faggella

[Image: A.I.-controlled robot doctor examining a patient]

Since early 2013, IBM’s Watson has been used in the medical field, and after winning an astounding series of games against one of the world’s best living Go players, Google DeepMind’s team decided to throw its weight behind the medical opportunities of its technologies as well.

Many of the machine learning (ML) industry’s hottest young startups are devoting significant portions of their efforts to healthcare, including Nervanasys (recently acquired by Intel), Ayasdi (raised $94MM as of 02/16), Sentient.ai (raised $144MM as of 02/16) and Digital Reasoning Systems (raised $36MM as of 02/16), among others.

Current Machine Learning Healthcare Applications

The list below is by no means complete, but it provides a useful lay of the land of some of ML’s impact on the healthcare industry.

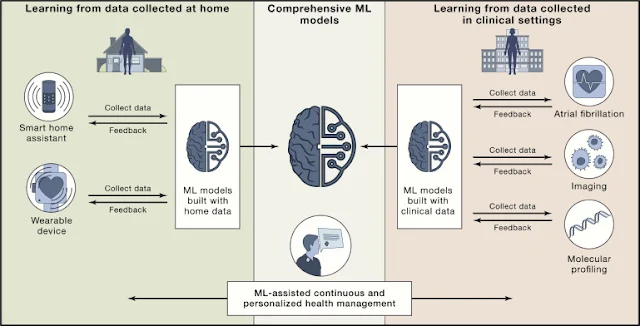

[Image: How Machine Learning Applications Could Help Individuals Maintain Health]

[Image: Integrating Data and Machine Learning Models for Continuous and Personalized Health Management]

Diagnosis in Medical Imaging:

Computer vision has been one of the most remarkable breakthroughs, thanks to machine learning and deep learning, and it’s a particularly active healthcare application for ML. Microsoft’s InnerEye initiative (started in 2010) is presently working on image diagnostic tools, and the team has posted a number of videos explaining its developments, including one on machine learning for image analysis. Deep learning will probably play an increasingly important role in diagnostic applications as it becomes more accessible, and as more data sources (including rich and varied forms of medical imagery) become part of the AI diagnostic process.

However, deep learning applications are known to be limited in their explanatory capacity. In other words, a trained deep learning system cannot explain “how” it arrived at its predictions, even when they’re correct. This kind of “black box problem” is all the more challenging in healthcare, where doctors won’t want to make life-and-death decisions without a firm understanding of how the machine arrived at its recommendation (even if those recommendations have proven to be correct in the past).

For readers who aren’t familiar with deep learning but would like an informed, simplified explanation, I recommend listening to our interview with Google DeepMind’s Nando de Freitas.

Treatment Queries and Suggestions:

Diagnosis is a very complicated process and involves, at least for now, a myriad of factors (everything from the color of the whites of a patient’s eyes to the food they have for breakfast) that machines cannot presently collate and make sense of. However, there’s little doubt that a machine might help physicians make the right considerations in diagnosis and treatment, simply by serving as an extension of scientific knowledge.

That’s what the Oncology department at Memorial Sloan Kettering (MSK) is aiming for in its recent partnership with IBM Watson. MSK has reams of data on cancer patients and the treatments used over decades, and the system is designed to present and suggest treatment ideas or options to doctors dealing with unique future cancer cases, by pulling from what worked best in the past. This kind of intelligence-augmenting tool, while difficult to sell into the hurly-burly world of hospitals, is already in preliminary use today.
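"Pulling from what worked best in the past" can be illustrated with case-based retrieval: find the past patients most similar to the new one, then rank the treatments they received by how well those patients fared. This is a toy sketch, not how Watson or MSK's system works, and it is not clinical guidance. The two-number feature vectors, treatment names and outcome scores are entirely made up, and a real system would need far richer features, careful normalisation and clinical validation.

```python
import math
from collections import defaultdict

# Hypothetical, made-up records: (features, treatment, outcome score from 0 to 1).
past_cases = [
    ((0.9, 0.2), "treatment_A", 0.8),
    ((0.8, 0.3), "treatment_A", 0.7),
    ((0.85, 0.25), "treatment_B", 0.4),
    ((0.1, 0.9), "treatment_B", 0.9),
    ((0.2, 0.8), "treatment_C", 0.5),
]

def suggest(new_case, k=3):
    """Rank treatments by average outcome among the k most similar past cases."""
    nearest = sorted(past_cases, key=lambda c: math.dist(c[0], new_case))[:k]
    outcomes = defaultdict(list)
    for _, treatment, outcome in nearest:
        outcomes[treatment].append(outcome)
    ranked = sorted(outcomes.items(), key=lambda kv: sum(kv[1]) / len(kv[1]), reverse=True)
    return [(t, round(sum(v) / len(v), 2)) for t, v in ranked]

print(suggest((0.88, 0.22)))
```

The output is a ranked list of options with supporting evidence, not a decision, which matches the "intelligence-augmenting" role the text describes.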

Scaled Up / Crowdsourced Medical Data Collection:

There is a great deal of focus on pooling data from various mobile devices in order to aggregate and make sense of more live health data. Apple’s ResearchKit is aiming to do this in the treatment of Parkinson’s disease and Asperger’s syndrome by allowing users to access interactive apps (one of which applies machine learning for facial recognition) that assess their conditions over time; their use of the app feeds ongoing progress data into an anonymous pool for future study.

IBM is going to great lengths to acquire all the health data it can get its hands on, from partnering with Medtronic to make sense of diabetes and insulin data in real time, to buying out healthcare analytics company Truven Health for $2.6B.

Despite the tremendous deluge of healthcare data provided by the internet of things, the industry still seems to be experimenting with how to make sense of this information and make real-time changes to treatment. Scientists and patients alike can be optimistic that, as this trend of pooled consumer data continues, researchers will have more ammunition for tackling tough diseases and unique cases.

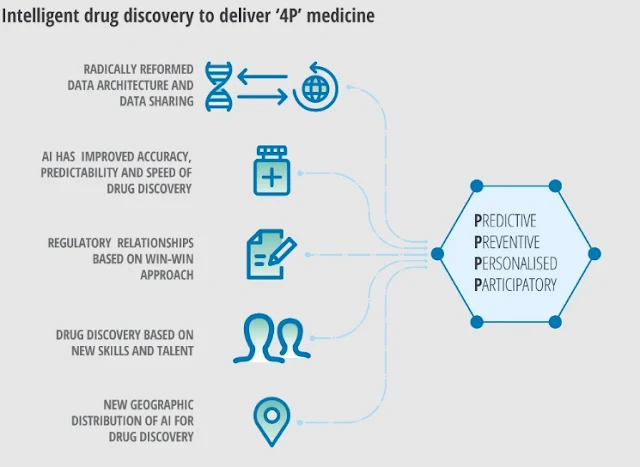

Drug Discovery:

While much of the healthcare industry is a morass of laws and criss-crossing incentives of various stakeholders (hospital CEOs, doctors, nurses, patients, insurance companies, etc.), drug discovery stands out as a source of relatively straightforward economic value for creators of machine learning healthcare applications. This application also deals with one relatively clear customer who generally has deep pockets: drug companies.

IBM’s own health applications have included drug discovery initiatives since their early days. Google has also jumped into the drug discovery fray and joins a host of companies already raising and making money by working on drug discovery with the help of machine learning.

We’ve covered drug discovery and pharma applications in greater depth elsewhere on Emerj. Many of our investor interviews (including our interview titled “Doctors Don’t Want to be Replaced” with Steve Gullans of Excel VM) feature a relatively optimistic outlook about the speed of innovation in drug discovery compared with many other healthcare applications (see our list of “unique obstacles” to medical machine learning in the conclusion of this article).

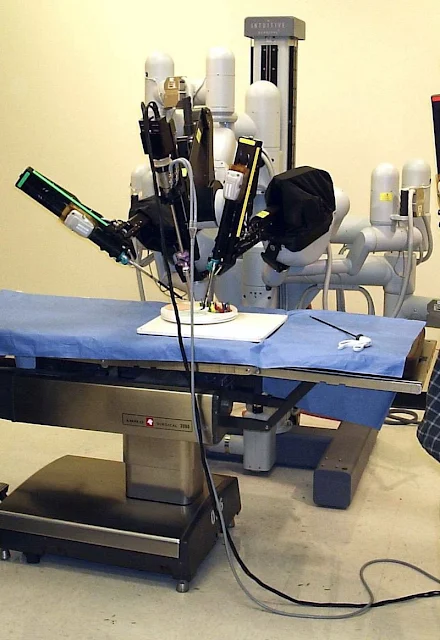

Robotic Surgery:

The da Vinci robot has gotten the bulk of attention in the robotic surgery space, and some could argue for good reason. This device allows surgeons to manipulate dexterous robotic limbs in order to perform surgeries with finer detail, in tighter spaces, and with fewer tremors than would be possible with the human hand alone. Here’s a video highlighting the incredible dexterity of the da Vinci robot:

While not all robotic surgery procedures involve machine learning, some systems use computer vision (aided by machine learning) to identify distances or specific body parts (such as hair follicles for transplantation, in the case of hair transplant surgery). In addition, machine learning is in some cases used to steady the motion of robotic limbs when they take directions from human controllers.
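The text notes that machine learning is sometimes used to steady a robot's motion. The sketch below does not use machine learning; it swaps in the simplest possible smoothing technique, an exponential moving average (a basic low-pass filter), to show what "steadying" means numerically. The hand path, the tremor size and the smoothing factor `alpha` are hypothetical values chosen for illustration.

```python
import math
import random

def smooth(samples, alpha=0.2):
    """Exponential moving average: a simple low-pass filter that damps fast jitter."""
    out, state = [], samples[0]
    for s in samples:
        state = alpha * s + (1 - alpha) * state  # blend new input with history
        out.append(state)
    return out

def rms_error(a, b):
    """Root-mean-square difference between two equal-length paths."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)) / len(a))

rng = random.Random(42)
# Hypothetical hand path: a slow, steady motion plus small random tremor.
intended = [0.001 * t for t in range(500)]
measured = [x + rng.gauss(0, 0.05) for x in intended]
filtered = smooth(measured)

print(round(rms_error(measured, intended), 3), round(rms_error(filtered, intended), 3))
```

The trade-off is lag: a smaller `alpha` removes more tremor but makes the instrument trail further behind the surgeon's hand, which is one reason real systems use more sophisticated, adaptive filtering.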

[Image: The da Vinci Surgical System]


The da Vinci system was used in an estimated 200,000 surgeries in 2012, most commonly for hysterectomies and prostate removals. It is called "da Vinci" in part because Leonardo da Vinci's study of human anatomy eventually led to the design of the first known robot in history.

The system has been used in the following procedures:

Radical prostatectomy, pyeloplasty, cystectomy,

nephrectomy and ureteral reimplantation;

Hysterectomy, myomectomy and sacrocolpopexy;

Hiatal hernia and inguinal hernia repair;

GI surgeries, including resections and cholecystectomy;

Transoral robotic surgery (TORS) for head and neck cancer;

Lung transplantation: the da Vinci System has been used in the world's first fully robotic surgery of this kind, thanks to a pioneering technique.

The da Vinci System consists of a surgeon's console that is typically in the same room as the patient, and a patient-side cart with three to four interactive robotic arms (depending on the model) controlled from the console. The arms hold objects, and can act as scalpels, scissors, bovies, or graspers. The final arm controls the 3D cameras. The surgeon uses the controls of the console to manoeuvre the patient-side cart's robotic arms. The system always requires a human operator.

Autonomous Robotic Surgery:

At present, robots like the da Vinci are mostly an extension of the dexterity and trained ability of a surgeon. In the future, machine learning could be used to combine visual data and motor patterns within devices such as the da Vinci in order to allow machines to master surgeries. Machines have recently developed the ability to model beyond-human expertise in some kinds of visual art and painting. If a machine can be trained to replicate the legendary creative capacity of Van Gogh or Picasso, we might imagine that with enough training, such a machine could “drink in” enough hip replacement surgeries to eventually perform the procedure on anyone, better than any living team of doctors. The IEEE has put together an interesting write-up on autonomous surgery that’s worth reading for those interested.

Improving Performance (Beyond Amelioration):

Orreco and IBM recently announced a partnership to boost athletic performance, and IBM set up a similar partnership with Under Armour in January 2016. While Western medicine has kept its primary focus on the treatment and amelioration of disease, there is a great need for proactive prevention and intervention, and the first wave of IoT devices (notably the Fitbit) is pushing these applications forward.

One can imagine that disease prevention or athletic performance won’t be the only applications of health-promoting apps. Machine learning may be implemented to track worker performance or stress levels on the job, as well as for seeking positive improvements in at-risk groups (not just relieving symptoms or healing after setbacks).

The ethical concerns around “augmenting” human physical and (especially) mental abilities are intense, and will likely become increasingly pressing in the coming 15 years as enhancement technologies become viable.
